Robots.txt is a small but powerful file that tells search engine bots how to interact with your website. It’s like a guidebook, showing them which pages to visit (crawl) and which ones to skip. A well-configured Robots.txt can help your website’s SEO. It isn’t specific to PrestaShop and can be found on almost any site, but in this post we’ll discuss how it works in PrestaShop.

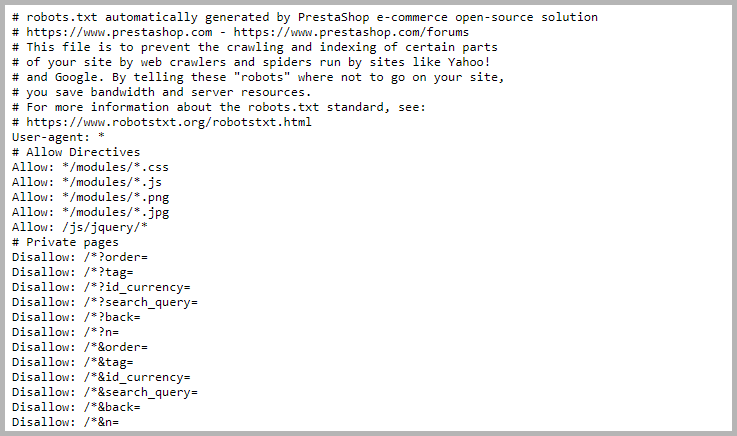

Default Robots.txt in PrestaShop

Luckily for PrestaShop users, a robots.txt file is created automatically during the installation process. It’s set up for the needs of a typical eCommerce site. Most of the time, you won’t need to touch this file unless you have a specific reason.

When You Need to Edit Robots.txt and When You Don’t

There are a few reasons why you might want to edit your Robots.txt file. Here are some examples:

- If you have two or more pages with the same content (duplicates), you might want to tell bots to ignore one to avoid duplicate-content issues with search engines.

- If you’ve added new pages or sections to your site, you might want to control how visible they are to search engines.

- Maybe you have pages or files you’d rather keep private. Keep in mind, however, that instructions in Robots.txt are only recommendations: well-behaved search engine bots follow them, but the file does not restrict access.

But remember, if you’re just using PrestaShop’s default functions and your SEO is working fine, there’s no need to change your Robots.txt.

Accessing the Robots.txt File

Your robots.txt file lives in the root directory of your PrestaShop site. To edit it, open that directory with an FTP client or your hosting file manager.

To view the file, just type “https://YourSite.com/robots.txt” (your shop URL + “robots.txt”) into your browser’s address bar.
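If you’d rather check the file from a script, the sketch below builds the robots.txt URL for a shop and downloads it with Python’s standard library. The function names `robots_url` and `fetch_robots` and the example.com address are placeholders for illustration, not part of PrestaShop.

```python
from urllib.parse import urlsplit
from urllib.request import urlopen


def robots_url(shop_url: str) -> str:
    """Return the robots.txt URL for the host that serves shop_url."""
    parts = urlsplit(shop_url)
    # Crawlers look for robots.txt at the root of the host.
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def fetch_robots(shop_url: str, timeout: float = 10.0) -> str:
    """Download robots.txt and return its text (needs network access)."""
    with urlopen(robots_url(shop_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    # Placeholder address; replace it with your own shop URL.
    print(robots_url("https://example.com"))
```

Calling `fetch_robots("https://example.com")` returns the file contents as a string, which you can print or save for comparison before and after an edit.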

Generating Robots.txt in PrestaShop

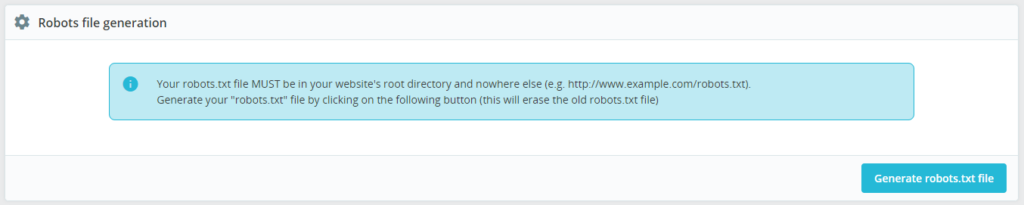

In PrestaShop, you can regenerate the Robots.txt file right from the Back Office. Here’s how to do it:

- Go to the “Shop Parameters >> Traffic & SEO” page.

- Find the “Robots file generation” section at the bottom.

- Click the “Generate robots.txt file” button.

That’s it. PrestaShop will generate a new robots.txt file. This is useful if your robots.txt file is missing for some reason, or if you edited the file and want to revert your changes.

Customizing Robots.txt

The Robots.txt file is written using a very simple text syntax, making it easy to understand and edit. Each group of rules in a Robots.txt file consists of two parts: one or more User-agent lines, followed by at least one Disallow or Allow line.

Here are some quick examples:

Block everything:

```
User-agent: *
Disallow: /
```

Block only Googlebot:

```
User-agent: Googlebot
Disallow: /
```

Block specific directory:

```
User-agent: *
Disallow: /private
```

Block specific directory except one URL:

```
User-agent: *
Disallow: /private
Allow: /private/public-page
```

Robots.txt syntax

User-agent:

This specifies the search engine bot that the rule will apply to. If you want to target all bots, you can use an asterisk (*). For example:

```
User-agent: *
```

This rule applies to all bots.

Disallow:

This is followed by the relative URL path that you want to block from the bots. For example:

```
Disallow: /private
```

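This rule tells the bot not to crawl any URL whose path starts with “/private”, which covers the “private” directory and everything in it. Note that Disallow stops crawling, not indexing: a blocked URL can still show up in search results if other pages link to it.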
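Disallow values are matched as path prefixes, which is easy to get wrong. The sketch below is a simplified illustration of that idea only: the helper name `is_blocked` is made up for this example, and it ignores the `*` and `$` wildcards that some crawlers support.

```python
def is_blocked(disallow_path: str, url_path: str) -> bool:
    """Return True when url_path starts with the Disallow value."""
    # An empty Disallow value blocks nothing.
    return bool(disallow_path) and url_path.startswith(disallow_path)


SAMPLE_PATHS = [
    "/private",
    "/private/page.html",
    "/private-files/a.pdf",
    "/public",
]

for path in SAMPLE_PATHS:
    without_slash = is_blocked("/private", path)
    with_slash = is_blocked("/private/", path)
    print(f"{path}: '/private' blocks={without_slash}, '/private/' blocks={with_slash}")
```

In this sketch, “/private” also catches “/private-files/a.pdf”, while “/private/” only catches paths inside the directory.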

Allow:

This is used when you want to override a Disallow rule for a specific page or directory within a disallowed directory. For example:

```
Allow: /private/public-page
```
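When an Allow and a Disallow rule both match a URL, crawlers that follow RFC 9309 (Google among them) pick the most specific rule, meaning the one with the longest path, and prefer Allow on a tie. Here is a minimal sketch of that logic. The `is_allowed` helper is hypothetical, handles plain prefixes only (no wildcards), and other bots may resolve conflicts differently.

```python
from typing import Sequence, Tuple


def is_allowed(rules: Sequence[Tuple[str, str]], url_path: str) -> bool:
    """Apply longest-match to (directive, path) pairs from one group."""
    best_len = -1
    allowed = True  # no matching rule means the URL may be crawled
    for directive, path in rules:
        if not path or not url_path.startswith(path):
            continue
        is_allow = directive.lower() == "allow"
        # Longer paths are more specific; on a tie, Allow wins.
        if len(path) > best_len or (len(path) == best_len and is_allow):
            best_len = len(path)
            allowed = is_allow
    return allowed


RULES = [("Disallow", "/private"), ("Allow", "/private/public-page")]

for path in ["/private/public-page", "/private/other", "/products"]:
    print(path, "->", "allowed" if is_allowed(RULES, path) else "blocked")
```

With these two rules, “/private/public-page” is allowed because the Allow path is longer than the Disallow path that also matches it.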

Specific bot

If you want to block a specific bot, specify that bot’s name in the User-agent line. For example, if you wanted to block Google’s bot (Googlebot) from crawling a specific directory, you could write:

```
User-agent: Googlebot
```

Then add the Disallow line for the directory you want to block, for example “Disallow: /private”.
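To see how a bot-specific group behaves, you can feed the rules to Python’s built-in `urllib.robotparser` module. In this sketch the directory “/private”, the example.com URL, and the second bot name “ExampleBot” are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/private/report"
googlebot_ok = parser.can_fetch("Googlebot", url)
otherbot_ok = parser.can_fetch("ExampleBot", url)

print("Googlebot may crawl:", googlebot_ok)
print("ExampleBot may crawl:", otherbot_ok)
```

Only Googlebot is blocked here. Any bot without its own group (and with no “User-agent: *” group to fall back on) is free to crawl the URL.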

Sitemap

You can also provide a link to your sitemap in your Robots.txt file:

```
Sitemap: https://example.com/sitemap.xml
```
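If you want to confirm that the Sitemap line is picked up, Python’s `urllib.robotparser` can list the sitemaps it finds (the `site_maps()` method needs Python 3.8 or newer). The file contents below are a made-up sample, not PrestaShop’s default file.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns None when the file has no Sitemap lines.
sitemaps = parser.site_maps() or []
print(sitemaps)
```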

Testing Robots.txt

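After you’ve tweaked your Robots.txt file, test it to make sure it works as intended. You can use Google Search Console for this. The standalone tester used to live at https://www.google.com/webmasters/tools/robots-testing-tool, but Google has since retired it in favor of the robots.txt report inside Search Console.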

Or just open it in your browser to see if it looks okay – https://YourSite.com/robots.txt
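You can also script a quick check. The sketch below uses Python’s `urllib.robotparser` to compare a rule set against a list of URLs you expect to be crawlable or blocked. The `check_rules` helper, the sample rules, and the URLs are assumptions for illustration. Note that this module applies rules in file order, so for overlapping Allow and Disallow lines its answer may differ from Google’s.

```python
from urllib.robotparser import RobotFileParser


def check_rules(robots_txt, expectations, user_agent="*"):
    """Return (url, expected, actual) for every expectation that fails."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    failures = []
    for url, expected in expectations.items():
        actual = parser.can_fetch(user_agent, url)
        if actual != expected:
            failures.append((url, expected, actual))
    return failures


ROBOTS_TXT = """\
User-agent: *
Disallow: /private
"""

# True means "should be crawlable", False means "should be blocked".
EXPECTED = {
    "https://example.com/": True,
    "https://example.com/private/orders": False,
}

failures = check_rules(ROBOTS_TXT, EXPECTED)
print(failures or "all expectations met")
```

Keeping a list like `EXPECTED` next to your Robots.txt makes it easy to rerun the check after every edit.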

Common Mistakes

When editing your Robots.txt file, watch out for these common mistakes:

1. Misplacement of the Robots.txt File: The Robots.txt file should always be placed in the root directory. Placing it elsewhere will lead to it being ignored by bots.

2. Inconsistent Use of Trailing Slash: A trailing slash (/) changes what a rule matches. “Disallow: /private/” blocks only URLs inside the “private” directory, while “Disallow: /private” blocks every path that starts with “/private”, including “/private-files” and “/private.html”.

3. Ignoring Case Sensitivity: URL paths are case-sensitive, meaning “/My-Secret-Page” is different from “/my-secret-page”. The paths in your Robots.txt rules must match the case of the real URLs. Directive names such as “User-agent” and “Disallow” are not case-sensitive.

4. Blocking All Bots: A rule like “User-agent: *” followed by “Disallow: /” blocks all bots from your entire website. Unless that’s your intention, avoid this setup.

5. Using Allow Directive Incorrectly: The major search engines support “Allow”, but some older or simpler bots may not recognize it, so relying on it as a complete access control measure can lead to problems.

6. Neglecting the Use of Comments: Forgetting to use “#” before comments can cause confusion for bots trying to read the file.

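7. Using Noindex in Robots.txt: “Noindex” is a directive for individual web pages and is not recognized in a Robots.txt file. To keep a page out of search results, use a robots meta tag or an X-Robots-Tag HTTP header on that page instead.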

8. Not Updating the Robots.txt File: Forgetting to update the Robots.txt file as your website evolves can lead to obsolete or incorrect rules.

9. Leaving a Testing Robots.txt Live: Always remember to replace a restrictive testing Robots.txt file with your regular one after you’re done testing.

10. Using Incorrect Syntax: Even small typos in directives like “User-agent” and “Disallow”, or in the paths you enter, can cause significant issues with how bots interpret your Robots.txt file.
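Some of these mistakes can be caught automatically. The sketch below is a small, hypothetical linter (the `lint_robots` name and the set of known directives are assumptions, not an official list). It flags misspelled or unsupported directives such as “Noindex”, lines without a colon, and a blanket “Disallow: /” for all bots.

```python
# Directive names this sketch accepts; Crawl-delay is non-standard
# but honored by some crawlers.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots(text):
    """Return a list of (line_number, message) warnings."""
    warnings = []
    current_agents = []
    seen_rule = False  # True once the current group has an Allow/Disallow line
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        if ":" not in line:
            warnings.append((number, "missing ':' separator"))
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        key = name.lower()
        if key not in KNOWN_DIRECTIVES:
            warnings.append((number, f"unknown directive '{name}'"))
        elif key == "user-agent":
            if seen_rule:  # a User-agent line after rules starts a new group
                current_agents, seen_rule = [], False
            current_agents.append(value)
        elif key in ("allow", "disallow"):
            seen_rule = True
            if key == "disallow" and value == "/" and "*" in current_agents:
                warnings.append((number, "blocks the whole site for all bots"))
    return warnings


SAMPLE = """\
User-agent: *
Dissalow: /private
Noindex: /old-page
Disallow: /
"""

for number, message in lint_robots(SAMPLE):
    print(f"line {number}: {message}")
```

A check like this won’t replace testing in a real search engine tool, but it can catch typos before the file goes live.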

Remember, your Robots.txt is a powerful SEO tool. Take the time to set it up right and it can really help your PrestaShop site stand out in the world of eCommerce.